Use a local video overlay for a faster-feeling, mirrored self-view

Eyeson video meetings are based on the MCU+ Single-Stream Technology, which provides many advantages: everyone sees the same thing at the same time, you can create virtual rooms with its layout and layer features, and much more.

But this also means that you see yourself the way all other participants see you, and that is not what you are used to. In other meeting tools, your local video is usually shown instantly and mirrored, based on the following trick:

Since most other meeting tools are based on an SFU architecture, they show your local video next to the other participants' videos. The local video on your screen has no delay because it comes directly from your webcam, whereas all other participants' videos are delayed because they have been sent over the internet.

Eyeson is based on the MCU architecture, so you see your local video only after it has been sent over the internet. This brings many advantages like layout and overlay features, but adds a noticeable delay.

The basis for the trick

With EyesonJS on your custom UI, you can apply the same trick with the local video to give your customers a more instant feeling.

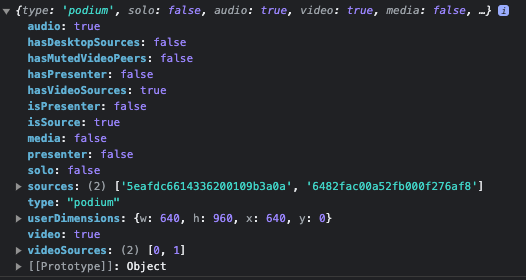

The "podium" event includes the viewbox of the current user on the remote stream, called userDimensions. Use this data to place the localStream video above the remote video stream.

If you use the "local video overlay" trick, make sure to avoid the layer feature. Otherwise, the local video overlay would hide parts of the layer, or even all of it!

The userDimensions object contains:

- w: width

- h: height

- x: left pixel offset

- y: top pixel offset

Offsets and dimensions are relative to the Eyeson video resolution, which is either 1280x960 pixels (960p, the default) or 1280x720 pixels (HD in widescreen mode).
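As a worked example of how these values map to the screen, the following minimal sketch scales a userDimensions box from stream coordinates to the pixels of a displayed video. The helper name scaleViewbox and the sample numbers are made up for illustration. Only the w, h, x, y fields and the 1280x960 resolution come from the description above.

```javascript
// Hypothetical helper: scale a viewbox from stream coordinates to display pixels.
// streamSize is the Eyeson stream resolution, displaySize the rendered video size.
const scaleViewbox = (userDimensions, streamSize, displaySize) => {
  const widthRatio = displaySize.width / streamSize.width;
  const heightRatio = displaySize.height / streamSize.height;
  return {
    left: userDimensions.x * widthRatio,    // horizontal values use the width ratio
    top: userDimensions.y * heightRatio,    // vertical values use the height ratio
    width: userDimensions.w * widthRatio,
    height: userDimensions.h * heightRatio,
  };
};

// Sample values: the user occupies the top-right quadrant of a 1280x960 stream
// that is displayed at half size (640x480).
const box = scaleViewbox(
  { x: 640, y: 0, w: 640, h: 480 },
  { width: 1280, height: 960 },
  { width: 640, height: 480 },
);
console.log(box); // { left: 320, top: 0, width: 320, height: 240 }
```

Because the remote video keeps its aspect ratio, both ratios are normally identical. Keeping them separate simply makes the intent of each line explicit.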

Show me the code (MCU only)

Here's our suggestion for how to calculate the position and size of the local video overlay:

import { debounce } from '@eyeson/js';

// Position and size of the remote video inside its container
let _remoteVideoPosition = null;
// Viewbox of the local user on the remote stream, from the "podium" event
let _userDimensions = null;
// Resolution of the remote stream; height becomes 720 in widescreen mode
const _streamSize = {
  width: 1280,
  height: 960,
};
const _videoContainer = document.querySelector('#main-video');
const _videoLocal = document.querySelector('#video-local');

const onEyesonEvent = event => {
  const { type } = event;
  if (type === 'accept') {
    _videoLocal.srcObject = event.localStream;
    calculateRemoteVideoPosition();
  }
  else if (type === 'room_ready') {
    _streamSize.height = event.content.options.widescreen ? 720 : 960;
  }
  else if (type === 'stream_update') {
    if (event.localStream) {
      _videoLocal.srcObject = event.localStream;
    }
  }
  else if (type === 'podium') {
    _userDimensions = event.userDimensions;
    updateOverlay();
  }
};

// Find where the remote video is drawn inside the container,
// keeping the aspect ratio of the stream.
const calculateRemoteVideoPosition = () => {
  const bbox = _videoContainer.getBoundingClientRect();
  const videoRatio = _streamSize.width / _streamSize.height;
  const ratio = bbox.width / bbox.height;
  const position = { x: 0, y: 0, width: 0, height: 0 };
  if (ratio > videoRatio) { // more width
    position.y = 0;
    position.height = bbox.height;
    position.width = position.height * videoRatio;
    position.x = (bbox.width - position.width) / 2;
  }
  else if (ratio < videoRatio) { // more height
    position.x = 0;
    position.width = bbox.width;
    position.height = position.width * (_streamSize.height / _streamSize.width);
    position.y = (bbox.height - position.height) / 2;
  }
  else { // same size
    position.x = 0;
    position.y = 0;
    position.width = bbox.width;
    position.height = bbox.height;
  }
  _remoteVideoPosition = position;
};

// Place the local video exactly above the user's viewbox on the remote video.
const updateOverlay = () => {
  if (_remoteVideoPosition && _userDimensions) {
    const widthRatio = _remoteVideoPosition.width / _streamSize.width;
    const heightRatio = _remoteVideoPosition.height / _streamSize.height;
    // Vertical values scale with heightRatio, horizontal values with widthRatio
    const position = {
      top: `${_userDimensions.y * heightRatio + _remoteVideoPosition.y}px`,
      left: `${_userDimensions.x * widthRatio + _remoteVideoPosition.x}px`,
      width: `${_userDimensions.w * widthRatio}px`,
      height: `${_userDimensions.h * heightRatio}px`,
    };
    Object.assign(_videoLocal.style, position);
  }
};

// _eyeson is the EyesonJS instance of your application (not shown here)
const joinMeeting = accessKey => {
  _eyeson.onEvent(onEyesonEvent);
  _eyeson.start(accessKey, { audio: true, video: true });
};

// Recalculate whenever the container is resized
new ResizeObserver(debounce(() => {
  calculateRemoteVideoPosition();
  updateOverlay();
}, 300)).observe(_videoContainer);
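To check the letterbox calculation without a browser, here is a small pure-function variant with sample numbers. It is only a sketch: the name fitContain and the container sizes are hypothetical, and it uses a single scale factor (the smaller of the two side ratios) instead of the three branches above, which should yield the same result.

```javascript
// Hypothetical pure variant of calculateRemoteVideoPosition():
// fit a stream of streamSize into a container while keeping the aspect ratio.
const fitContain = (container, streamSize) => {
  // The smaller ratio is the one that makes the whole video fit
  const scale = Math.min(
    container.width / streamSize.width,
    container.height / streamSize.height,
  );
  const width = streamSize.width * scale;
  const height = streamSize.height * scale;
  return {
    x: (container.width - width) / 2,   // centered horizontally
    y: (container.height - height) / 2, // centered vertically
    width,
    height,
  };
};

const stream = { width: 1280, height: 960 };

// Wide container: bars on the left and right
const wide = fitContain({ width: 1600, height: 900 }, stream);
console.log(wide); // { x: 200, y: 0, width: 1200, height: 900 }

// Tall container: bars above and below
const tall = fitContain({ width: 800, height: 900 }, stream);
console.log(tall); // { x: 0, y: 150, width: 800, height: 600 }
```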

The CSS is defined as follows. Note the object-fit: cover declaration, which zooms and crops the webcam video so that it fills the overlay.

#main-video {
  position: relative;
}

#main-video #video-local {
  position: absolute;
  top: 0;
  left: 0;
  object-fit: cover;
}

The example code above only works with options[sfu_mode]=disabled, so that the MCU remote video stream is always the only stream! See the Eyeson API documentation.
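As a rough sketch of how this option could be passed when creating a room, the snippet below only builds the request and does not send it. The option options[sfu_mode]=disabled comes from the text above. The endpoint URL, the Authorization header, the user[name] parameter, and the helper name buildRoomRequest are assumptions, so check them against the Eyeson API documentation before use.

```javascript
// Hypothetical helper that prepares a "create room" request with SFU mode disabled.
// Assumptions: endpoint URL, Authorization header and user[name] parameter.
const buildRoomRequest = (apiKey, userName) => {
  const body = new URLSearchParams({
    'user[name]': userName,
    'options[sfu_mode]': 'disabled', // always use the MCU remote video stream
  });
  return {
    url: 'https://api.eyeson.team/rooms',
    options: {
      method: 'POST',
      headers: { Authorization: apiKey },
      body,
    },
  };
};

const request = buildRoomRequest('YOUR_API_KEY', 'Jane');
console.log(request.options.body.get('options[sfu_mode]')); // disabled
```

The returned object is shaped so that it could be handed to fetch(request.url, request.options) on your server, where the API key stays private.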

Adjustments for SFU mode

If SFU mode is enabled, it gets much more complex. Now we have to consider all of the following cases:

- Only one participant with or without camera

- 2 participants in SFU mode, each with or without camera

- Presentation switches to SFU mode, self or other presenter

- More than 2 participants (MCU mode), camera on or off and visible or not visible

With this in mind, the methods need to be updated.

- There's a new state "solo", "sfu" or "mcu"

- New class "isPresenter" to display the correct video and turn off mirror effect

- Class "camOff" if the local webcam is turned off

- Class "videoOff" if webcam is not visible on the remote video stream

// Additional state for SFU mode
let _sfuMode = false;
let _solo = false;
let _hasMutedVideoPeers = false;
let _isPresenter = false;

const onEyesonEvent = event => {
  const { type } = event;
  if (type === 'accept') {
    document.body.dataset.visible = 'mcu';
    // ...
    calculateRemoteVideoPosition();
  }
  else if (type === 'remote_description_update') {
    _sfuMode = event.update.sfu;
    updateOverlay();
  }
  else if (type === 'podium') {
    // The local webcam is not visible on the remote video stream
    const videoOff = !event.video || (event.hasPresenter && !event.isPresenter);
    document.body.classList.toggle('videoOff', videoOff);
    document.body.classList.toggle('isPresenter', event.isPresenter);
    _solo = event.solo;
    _hasMutedVideoPeers = event.hasMutedVideoPeers;
    _userDimensions = event.userDimensions;
    _isPresenter = event.isPresenter;
    updateOverlay();
  }
  // ...
};

const updateOverlay = () => {
  // Set the state "solo", "sfu" or "mcu" for the CSS rules
  if (_solo || _isPresenter || (_sfuMode && _hasMutedVideoPeers)) {
    document.body.dataset.visible = 'solo';
  }
  else if (_sfuMode && !_hasMutedVideoPeers) {
    document.body.dataset.visible = 'sfu';
  }
  else {
    document.body.dataset.visible = 'mcu';
  }
  let position = null;
  if (_isPresenter) { // full size
    position = {
      top: 0,
      left: 0,
      width: '100%',
      height: '100%',
    };
  }
  else if (_solo || (_sfuMode && !_hasMutedVideoPeers)) { // small preview
    position = {
      top: 'auto',
      left: 'auto',
      width: '200px',
      height: 'auto',
    };
  }
  else if (_userDimensions && _remoteVideoPosition) { // calculated size and position
    const widthRatio = _remoteVideoPosition.width / _streamSize.width;
    const heightRatio = _remoteVideoPosition.height / _streamSize.height;
    // Vertical values scale with heightRatio, horizontal values with widthRatio
    position = {
      top: `${_userDimensions.y * heightRatio + _remoteVideoPosition.y}px`,
      left: `${_userDimensions.x * widthRatio + _remoteVideoPosition.x}px`,
      width: `${_userDimensions.w * widthRatio}px`,
      height: `${_userDimensions.h * heightRatio}px`,
    };
  }
  if (position) {
    Object.assign(_videoLocal.style, position);
  }
};
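The state decision in updateOverlay() is easier to reason about, and to test, when it is isolated from the DOM. The following sketch extracts it into a pure function. The name resolveVisibleState is hypothetical, while the flags are the ones read from the "podium" and "remote_description_update" events above.

```javascript
// Hypothetical pure function mirroring the data-visible logic of updateOverlay().
const resolveVisibleState = ({ solo, isPresenter, sfuMode, hasMutedVideoPeers }) => {
  if (solo || isPresenter || (sfuMode && hasMutedVideoPeers)) {
    return 'solo';
  }
  if (sfuMode && !hasMutedVideoPeers) {
    return 'sfu';
  }
  return 'mcu';
};

const base = { solo: false, isPresenter: false, sfuMode: false, hasMutedVideoPeers: false };

console.log(resolveVisibleState({ ...base, solo: true })); // solo
console.log(resolveVisibleState({ ...base, sfuMode: true })); // sfu
console.log(resolveVisibleState({ ...base, sfuMode: true, hasMutedVideoPeers: true })); // solo
console.log(resolveVisibleState(base)); // mcu
```

In the page itself, the result would be assigned to document.body.dataset.visible, exactly as the code above does inline.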

The CSS definitions are adjusted as follows:

#main-video {
  position: relative;
}

#main-video #video-local {
  position: absolute;
  top: 0;
  left: 0;
  width: 200px;
  object-fit: cover;
}

body.isPresenter #main-video #video-local {
  object-fit: contain;
}

body[data-visible="solo"] #video-local,
body[data-visible="sfu"] #video-local {
  right: 0;
  bottom: 1rem;
}

body[data-visible="solo"] #video-remote,
body.videoOff #video-local,
body.camOff #video-local {
  display: none;
}

Mirror effect for local webcam

Since we are using a separate video element, we can easily mirror the webcam video by assigning the following CSS:

body:not(.isPresenter) #video-local.mirror {
  -moz-transform: scaleX(-1);
  -o-transform: scaleX(-1);
  -webkit-transform: scaleX(-1);
  transform: scaleX(-1);
  filter: FlipH;
  -ms-filter: "FlipH";
}

Note that the mirror effect is not applied during a presentation, because only your own webcam video should be displayed like in a mirror.

Download code example

We've prepared a full working example that contains the code shown in the description above.

Download eyeson-overlay-example.zip