Using SpeechRecognition via Browser

With no doubt JavaScript has become an elementary driving force for the Internet resulting in incredibly fast development and great success for the web. A lot of new and interesting JavaScript WebAPIs have become available and many of them are supported by major browsers. In this blog post we'll explore the Web Speech API to be used for speech recognition.

Progressive enhancement

The speech API is still experimental and may not be available in every user agent or at least not fully working. A common approach for modern websites and web applications is to progressively enhance the user experience, hence use what is available to the user. This design principle is known as progressive enhancement. If you create a web application or a website your own, I strongly recommend to start with implementing a smaller core feature set that supports a wide range of user agents. Then optimize and enhance the user experince using newer technology, keeping a fallback ensured.

In the Eyeson JavaScript library we collect requirements in a single feature detector interface in order to decide whether a user agent can handle a certain functionality or not. As an example, at the time of this writing Google Chrome requires an extension to handle screen capturing, whereas Mozilla Firefox supports a handy interface to access this functionality. Other user agents won't have this capability at all. Therefore the feature detector queries for available API's, asks for the installed extension and tries to figure out the best setup possible for the end user.

Back to the Web Speech API, it provides two working modes: Handling Text-to-Speech and SpeechRecognition. We'll skip the first and build a simple and handy extension for eyeson.team to control a video conference web GUI with speech input.

Speak My Language

When it comes to speech synthesis it is essential that the language dealt with

is known beforehand. In terms of accessibility a document level language

definition is essential, as assistive technologies using

text-to-speech may fallback on the users default language. Fortunately this is

as simple as setting the lang= attribute in your opening html-tag, you don't

want your visitor from France listening to a french dialect interpreting your

content in English.

In our case we don't want to concentrate too much on the content but the input

via the users voice. The API provides us with a configuration to set the users

language, if none provided will use the HTML documents lang attribute. In most

cases this will be fine, there's definitely room for improvement here. Having

British user dealing with language settings en-GB instead of en-US will

result in a surprisingly better matches and therefore a better user experience.

Interim Results, Grammar and other Options

Although speech recognition is quite a complex topic, the current specification has a short and powerful set of properties. Let's have a short look at some of the setting options provided.

- continuous

- Switches the recognition mode between a continuous listening and recognition service and a single listen-recognize stop and go mode. In our use case we want to constantly listen for user commands. In other scenarios you might want to start recognition by some other preceeding user interaction.

- interimResults

- Interim results provide you with quicker interpretations, kind of a

current state of the words captured. Although this is a very interesting

output, it might be a better fit for development, research and optimization

environments than a production system. In case you want to get a better

understanding of how your controls are interpreted set this option to true,

you can still control if a result is to be used by checking its

isFinalproperty. - maxAlternatives

- If you want to provide your user with some additional interactive selection to correct a faulty recognition this setting comes in handy. Note that the default value is set to one.

- Grammars

- Using JSpeech grammar format, a simple and readable plain textual format, the words recognized can be limited on specific words. In our code example we should definitely reduce our controls to the grammar required - the input commands we provide. However to a bit more more fun with this playground and to check out how much of our spoken words gets recognized we skip this step.

When to Use

With popular voice recognition assistants like Alexa, Siri, or OK Google your users might already be used to speech control. The use of this technology can improve accessibility to a wide range of your users significantly. Additionally there are scenarios where there's simply no other input mechanism available, think of a remote support using a headset requiring both hands to be free in order to get a job done.

If you do provide voice control, ensure your user is aware of it and what commands are available.

Coding Example

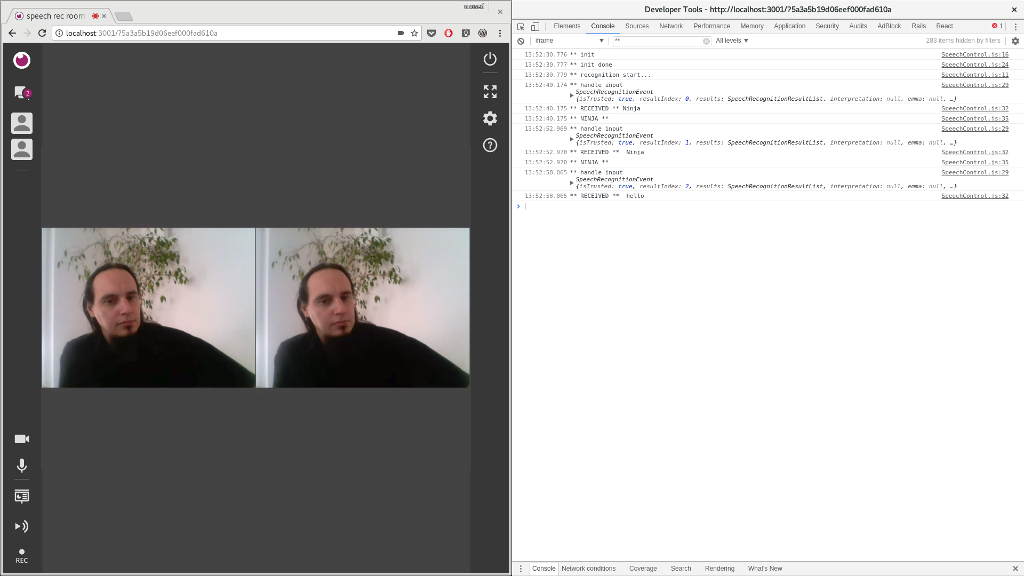

The following code snippets provides a full working example that can be used

to extend the [eyeson.com][www.eyeson.com] video conferencing web app

with speech recognition voice control. The Eyeson JavaScript library uses

event handling to interact with the video communications system, providing

a simple interface for developers. We'll use the toogle_video event in order

to mute our camera and make it active again on voice command ninja (ref).

As described we try to use any SpeechRecognition inside the initialization method, or fallback on not sticking any further with this feature. If we successfully detect the API to be available, we configure to skip interim results, stick with one result and provide a continuous recognition.

We use onresult to attach an input handler, and start listening. Within a

received result, we're just interested on the transcript interpreted by our

recognition - a received 'ninja' will get our attention and the input handler

receives the video toggle event, switching between mute and unmute state.

/**

* Handle speech input.

**/

class SpeechControl {

constructor(handler) {

this.handler = handler;

if (this.init()) {

this.recognition.onresult = this.handleInput.bind(this);

console.debug('recognition start...');

this.recognition.start();

}

}

init() {

this.recognition = new (window.SpeechRecognition ||

window.webkitSpeechRecognition || window.mozSpeechRecognition ||

window.oSpeechRecognition || window.msSpeechRecognition)();

if (!this.recognition) {

return false;

}

this.recognition.interimResults = false;

this.recognition.maxAlternatives = 1;

this.recognition.continuous = true;

return true;

}

handleInput(event) {

const sentence = event.results[event.results.length - 1][0];

console.debug('** RECEIVED **', sentence.transcript);

if (sentence.transcript.toLowerCase().trim() === 'ninja') {

console.debug('** NINJA **');

this.handler && this.handler({ type: 'toggle_video' });

}

}

}

export default SpeechControl;

Browser Support

Note that the speech recognition web API is fully available only in Google Chrome. A specification draft version is created and published for W3C. Firefox and Opera web browser ship with the support as well, but require manual change of configuration flags in order to enable the feature.

Conclusion

Although speech recognition might not have full support for every user agent, it might be available for users who want or even need to use it. This powerful but easy to handle control mechanism might give your website or web application a head start to the upcoming trend of interacting with software via voice.