Using Ghost to Create an RTMP-to-WebRTC Proxy

Integrating streams from drones or body cams into video meetings is a nice-to-have feature. Since many devices of this kind provide their stream via RTMP, the task to solve is bridging RTMP to WebRTC.

With ghost we provide a Go-based WebRTC client that connects to an Eyeson meeting and can stream into and out of that meeting. This client can easily be combined with a Go RTMP server to build such a bridge.

Transcode vs Passthrough

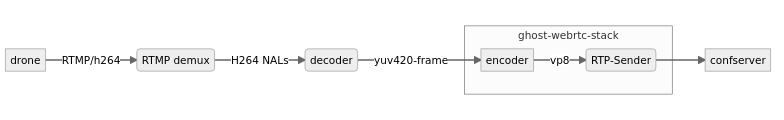

One could create an RTMP receiver, extract the H264 NALs from the video packets, run them through a decoder and feed the decoded frames to the WebRTC stack. The stack would then re-encode the video and adapt bitrate and framerate to its needs.

The main disadvantage is the expected high CPU load for running the decode/encode.

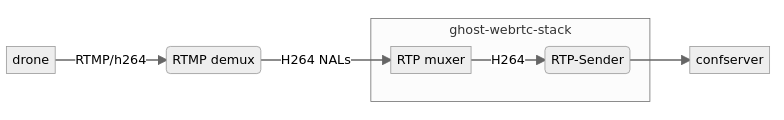

The other approach is to simply forward the H264 NALs, muxing them into RTP packets according to the WebRTC specs. The packets' video payloads are passed through to ghost (i.e. the WebRTC stack) unchanged.

The main advantage is low CPU usage; the main disadvantage is reduced flexibility in bandwidth management. Bitrate and framerate are controlled by the sender (i.e. the drone), but no direct feedback channel exists: the backpressure of the RTMP TCP connection is the only indicator available to the sender, and it only "measures" the leg drone ↔ RTMP server.

We chose the passthrough approach for the following reasons:

- It is fast, so it can run on weak clients (e.g. a Raspberry Pi).

- The network leg drone ↔ RTMP server is expected to have less bandwidth than the leg ghost ↔ confserver, so bandwidth management on the WebRTC side is less important.
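To make the muxing step of the passthrough approach more concrete, here is a minimal sketch of how a single NAL unit can be turned into RTP payloads following RFC 6184: small NAL units are sent as they are, larger ones are split into FU-A fragments. This is not the code ghost or any of the libraries used later runs; `packetizeNALU` and its constants are hypothetical names, aggregation (STAP-A) is left out, and the RTP header itself (sequence number, timestamp, marker bit) is not shown.

```go
package main

import "fmt"

const (
	fuaType     = 28   // NAL unit type of an FU-A fragment (RFC 6184)
	fuaStartBit = 0x80 // set in the FU header of the first fragment
	fuaEndBit   = 0x40 // set in the FU header of the last fragment
)

// packetizeNALU (hypothetical helper) splits one H264 NAL unit into RTP
// payloads of at most maxSize bytes. NAL units that fit are returned
// unchanged, larger ones are split into FU-A fragments.
func packetizeNALU(nalu []byte, maxSize int) [][]byte {
	if len(nalu) == 0 || maxSize < 3 {
		return nil
	}
	if len(nalu) <= maxSize {
		return [][]byte{nalu}
	}
	header := nalu[0]
	indicator := header&0xE0 | fuaType // keep F and NRI bits, set type 28
	naluType := header & 0x1F          // original type moves to the FU header
	body := nalu[1:]                   // the NAL header byte is not repeated
	chunk := maxSize - 2               // two bytes go to FU indicator + FU header

	var payloads [][]byte
	for off := 0; off < len(body); off += chunk {
		end := off + chunk
		if end > len(body) {
			end = len(body)
		}
		fuHeader := naluType
		if off == 0 {
			fuHeader |= fuaStartBit
		}
		if end == len(body) {
			fuHeader |= fuaEndBit
		}
		p := make([]byte, 0, 2+end-off)
		p = append(p, indicator, fuHeader)
		p = append(p, body[off:end]...)
		payloads = append(payloads, p)
	}
	return payloads
}

func main() {
	nalu := make([]byte, 3000)
	nalu[0] = 0x65 // IDR slice with NRI = 3
	for _, p := range packetizeNALU(nalu, 1200) {
		fmt.Printf("len=%d indicator=%#x fu-header=%#x\n", len(p), p[0], p[1])
	}
}
```

No pixel data is touched in this step, which is why the passthrough path is so much cheaper than a decode/encode cycle.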

Implementation

The full program can be found in ghost's example directory. Ghost uses the pion WebRTC stack, which is a pure Go implementation of the WebRTC specs. All that is required for using ghost is an Eyeson API key (API_KEY).

The following example creates a new meeting and joins it with the ghost client for 20 seconds. One can click on the guest link, which is printed to stdout, to join as a guest. One will see another participant with an empty video, who disappears after 20 seconds.

    package main

    import (
        "fmt"
        "os"
        "time"

        "github.com/eyeson-team/eyeson-go"
        "github.com/eyeson-team/ghost/v2"
    )

    func main() {
        // create a new meeting
        client := eyeson.NewClient(os.Getenv("API_KEY"))
        room, err := client.Rooms.Join("standup meeting", "mike", nil)
        if err != nil {
            panic("Failed to join room")
        }
        fmt.Println("Guest users join here: ", room.Data.Links.GuestJoin)

        // wait until meeting is ready
        if err = room.WaitReady(); err != nil {
            panic("Failed to wait for room")
        }

        // enter with ghost as client
        eyesonClient, err := ghost.NewClient(room.Data,
            ghost.WithForceH264Codec(),
            ghost.WithSendOnly())
        if err != nil {
            panic("Failed to create eyeson-client")
        }
        defer eyesonClient.Destroy()

        if err := eyesonClient.Call(); err != nil {
            panic("Failed to call")
        }

        // stay in the meeting for 20 seconds, then hang up
        time.Sleep(20 * time.Second)
        eyesonClient.TerminateCall()
    }

The next step is to start an RTMP server and inject its video via the ghost client. For the RTMP part we used the Go libraries gortsplib and rtmp.

The code listing below shows how the RTMP server is set up and handles one connection at a time.

The packet muxing happens in rtmpServer.HandleConn. The thing to take care of are the two NAL types SPS and PPS (more on NALs and parameter sets can be found here, in the section "Parameter Sets"). They carry vital configuration and initialisation information which the decoder on the receiving side requires. Without the SPS and PPS info the receiver can't decode the NALs. They are therefore prepended to the set of NALs of every keyframe.

h264Encoder.Encode encodes the NALs into RTP packets. Finally, videoTrack.WriteRTP writes the packets to the sender socket of the pion stack.

    import (
        "log"
        "net"
        "time"

        "github.com/aler9/gortsplib/pkg/h264"
        "github.com/aler9/gortsplib/pkg/rtph264"
        "github.com/eyeson-team/ghost/v2"
        "github.com/notedit/rtmp/av"
        rtmph264 "github.com/notedit/rtmp/codec/h264"
        "github.com/notedit/rtmp/format/rtmp"
    )

    func setupRtmpServer(videoTrack ghost.RTPWriter) {
        rtmpServer := rtmp.NewServer()

        lis, err := net.Listen("tcp", "127.0.0.1:1935")
        if err != nil {
            log.Println("Failed to listen:", err)
            return
        }

        // Init the rtph264-rtp-header-encoder only once,
        // and reuse if another rtmp-client connects.
        h264Encoder := rtph264.Encoder{
            PayloadType:    96,
            PayloadMaxSize: 1200,
        }
        h264Encoder.Init()

        rtmpServer.HandleConn = func(c *rtmp.Conn, nc net.Conn) {
            log.Println("New rtmp-conn created")
            var sps, pps []byte
            for {
                packet, err := c.ReadPacket()
                if err != nil {
                    log.Println("Failed to read packet:", err)
                    return
                }
                switch packet.Type {
                case av.H264DecoderConfig:
                    // read SPS and PPS and save them so those can be
                    // prepended to each keyframe.
                    // A different solution would be to signal the sprops via sdp.
                    // But this would require to start the call _after_ the
                    // rtmp-client is connected.
                    codec, err := rtmph264.FromDecoderConfig(packet.Data)
                    if err != nil {
                        log.Fatalf("Failed to decode decoder-config: %v", err)
                    }
                    if len(codec.SPS) > 0 {
                        sps = codec.SPS[0]
                    }
                    if len(codec.PPS) > 0 {
                        pps = codec.PPS[0]
                    }
                case av.H264:
                    // rtmp h264 packet uses AVCC bit-stream
                    // extract nalus from that bitstream
                    nalus, err := h264.AVCCDecode(packet.Data)
                    if err != nil {
                        log.Fatalf("Failed to decode packet: %v", err)
                    }
                    // only prepend keyframes with sps and pps,
                    // and only once both have been received
                    if packet.IsKeyFrame && len(sps) > 0 && len(pps) > 0 {
                        nalus = append([][]byte{sps, pps}, nalus...)
                    }
                    // convert nalus to rtp-packets
                    pkts, err := h264Encoder.Encode(nalus, packet.Time)
                    if err != nil {
                        log.Fatalf("error while encoding H264: %v", err)
                    }
                    for _, pkt := range pkts {
                        if err = videoTrack.WriteRTP(pkt); err != nil {
                            log.Printf("Failed to write h264 sample: %s", err)
                            return
                        }
                    }
                }
            }
        }

        // will handle clients sequentially
        for {
            nc, err := lis.Accept()
            if err != nil {
                time.Sleep(time.Second)
                continue
            }
            log.Println("New Client connected")
            rtmpServer.HandleNetConn(nc)
        }
    }
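The AVCC handling and the ordering of the parameter sets in the listing above can also be looked at in isolation. The following sketch uses only the standard library; `splitAVCC`, `nalType` and `withParameterSets` are hypothetical helpers and not part of gortsplib or rtmp. It assumes the common case of 4-byte length prefixes, whereas the decoder config can in principle announce a different prefix size.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// NAL unit types from the H264 spec that matter here.
const (
	nalTypeIDR = 5 // slice of a keyframe
	nalTypeSPS = 7 // sequence parameter set
	nalTypePPS = 8 // picture parameter set
)

// splitAVCC splits an AVCC payload, in which every NAL unit is preceded
// by its length as a 4-byte big-endian integer (assumed prefix size),
// into separate NAL units.
func splitAVCC(buf []byte) ([][]byte, error) {
	var nalus [][]byte
	for len(buf) > 0 {
		if len(buf) < 4 {
			return nil, errors.New("truncated length prefix")
		}
		n := int(binary.BigEndian.Uint32(buf))
		buf = buf[4:]
		if n <= 0 || n > len(buf) {
			return nil, errors.New("invalid NAL unit length")
		}
		nalus = append(nalus, buf[:n])
		buf = buf[n:]
	}
	return nalus, nil
}

// nalType returns the 5-bit type field of a NAL unit header.
func nalType(nalu []byte) byte { return nalu[0] & 0x1F }

// withParameterSets returns the NAL units of a keyframe with SPS and PPS
// placed in front, so the decoder sees them before the IDR slice.
func withParameterSets(sps, pps []byte, nalus [][]byte) [][]byte {
	out := make([][]byte, 0, len(nalus)+2)
	out = append(out, sps, pps)
	return append(out, nalus...)
}

func main() {
	sps := []byte{0x67, 0x42}
	pps := []byte{0x68, 0xCE}
	// One IDR NAL unit of 3 bytes in AVCC framing.
	avcc := []byte{0, 0, 0, 3, 0x65, 0xAA, 0xBB}
	nalus, err := splitAVCC(avcc)
	if err != nil {
		panic(err)
	}
	for _, n := range withParameterSets(sps, pps, nalus) {
		fmt.Printf("type=%d len=%d\n", nalType(n), len(n))
	}
}
```

The order matters: a receiver that joins late or lost the previous keyframe needs the SPS and PPS before it can start decoding the IDR slice that follows them.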

To link the RTMP server and ghost, we start the server from ghost's connection handler:

    eyesonClient.SetConnectedHandler(func(connected bool, localVideoTrack ghost.RTPWriter,
        localAudioTrack ghost.RTPWriter) {
        go setupRtmpServer(localVideoTrack)
    })
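The handler receives a `connected` flag, which suggests it may be invoked more than once, for example on disconnect. That is an assumption; the behaviour is not confirmed here. If it holds, one might want to start the RTMP server only on the first successful connection. A minimal sketch with `sync.Once` follows, where `rtpWriter` and `onceStarter` are hypothetical stand-ins so that the example compiles without the ghost module:

```go
package main

import (
	"fmt"
	"sync"
)

// rtpWriter is a hypothetical stand-in for ghost.RTPWriter.
type rtpWriter interface{}

// onceStarter (hypothetical) runs start at most once, on the first
// call of handle that reports connected == true.
type onceStarter struct {
	once  sync.Once
	start func(video rtpWriter)
}

// handle has the same parameter list as the connected handler above.
func (s *onceStarter) handle(connected bool, video, audio rtpWriter) {
	if !connected {
		return
	}
	s.once.Do(func() { s.start(video) })
}

func main() {
	started := 0
	s := &onceStarter{start: func(rtpWriter) { started++ }}
	s.handle(false, nil, nil) // ignored: not connected
	s.handle(true, nil, nil)  // starts the server
	s.handle(true, nil, nil)  // ignored: already started
	fmt.Println("server starts:", started)
}
```

In the real program, `start` would launch `setupRtmpServer` in a goroutine, and `s.handle` would be passed to `SetConnectedHandler` after adapting the parameter types.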

Notes

As noted above, no direct feedback loop exists, so a keyframe cannot be requested when one is missed. Using a small keyframe interval is therefore recommended, if the sending client allows it.
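To check which keyframe interval a sender actually produces, one could track the timestamps of incoming keyframes. The sketch below is a hypothetical helper, not part of ghost; `observe` would be called for every video packet, for instance with the packet's time and keyframe flag from the RTMP loop. The largest gap it records is roughly the worst-case time a viewer has to wait for the picture to recover after a lost keyframe.

```go
package main

import (
	"fmt"
	"time"
)

// keyframeTracker (hypothetical) records the largest gap between two
// consecutive keyframes.
type keyframeTracker struct {
	last   time.Duration // timestamp of the previous keyframe
	seen   bool          // whether a keyframe has been observed yet
	maxGap time.Duration // largest gap observed so far
}

// observe is called for every video packet with its timestamp.
func (k *keyframeTracker) observe(pts time.Duration, isKeyFrame bool) {
	if !isKeyFrame {
		return
	}
	if k.seen && pts-k.last > k.maxGap {
		k.maxGap = pts - k.last
	}
	k.last, k.seen = pts, true
}

func main() {
	// Simulate 30 fps video with a keyframe every 60 frames.
	var k keyframeTracker
	for i := 0; i < 180; i++ {
		pts := time.Duration(i) * time.Second / 30
		k.observe(pts, i%60 == 0)
	}
	fmt.Println("largest keyframe gap:", k.maxGap)
}
```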